The preceding chapters covered the concept of virtualization, emphasizing creating and managing virtual machines using KVM. This chapter will introduce a related technology in the form of Linux Containers. While there are some similarities between virtual machines and containers, key differences will be outlined in this chapter, along with an introduction to the concepts and advantages of Linux Containers. The chapter will also provide an overview of some CentOS Stream container management tools. Once the basics of containers have been covered in this chapter, the next chapter will work through some practical examples of creating and running containers on CentOS Stream 9.

Linux Containers and Kernel Sharing

In simple terms, Linux containers are a lightweight alternative to virtualization. A virtual machine contains and runs the entire guest operating system in a virtualized environment. The virtual machine, in turn, runs on top of an environment such as a hypervisor that manages access to the physical resources of the host system.

Containers work by using a concept referred to as kernel sharing, which takes advantage of the architectural design of Linux and UNIX-based operating systems.

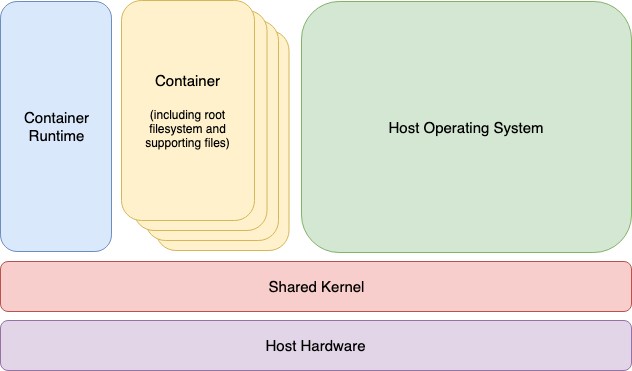

To understand how kernel sharing and containers work, it helps first to understand the two main components of Linux or UNIX operating systems. At the core of the operating system is the kernel. In simple terms, the kernel handles all the interactions between the operating system and the physical hardware. The second key component is the root file system which contains all the libraries, files, and utilities necessary for the operating system to function. Taking advantage of this structure, containers each have their own root file system but share the host operating system’s kernel. This structure is illustrated in the architectural diagram in Figure 27-1 below:

This type of resource sharing is made possible by the ability of the kernel to dynamically change the current root file system (a concept known as change root or chroot) to a different root file system without having to reboot the entire system. Linux containers are essentially an extension of this capability combined with a container runtime, the responsibility of which is to provide an interface for executing and managing the containers on the host system. Several container runtimes are available, including Docker, LXD, containerd, and CRI-O. Earlier versions of CentOS used Docker by default, but Podman has supplanted this as the default in CentOS Stream 9.
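The change-root operation described above can be tried in isolation. The following is a minimal Python sketch, assuming a Linux host and Python 3.9 or later. The helper name `run_in_root` is hypothetical, and the code illustrates only the chroot idea, not how Podman or any other container runtime is actually implemented. Changing the root requires root privileges, so the helper returns exit code 127 when the change of root or the command launch fails.

```python
import os


def run_in_root(new_root, argv):
    """Run argv in a child process whose root directory is new_root.

    Returns the child's exit code. The child exits with 127 if chroot
    is not permitted or the command cannot be started.
    """
    pid = os.fork()
    if pid == 0:
        # Child process: switch root, then replace ourselves with the command.
        try:
            os.chroot(new_root)   # requires root privileges
            os.chdir("/")         # make sure the working directory is inside the new root
            os.execvp(argv[0], argv)
        finally:
            # Reached only if chroot or exec failed; never return into the parent's code.
            os._exit(127)
    # Parent process: wait for the child and report its exit code.
    _, status = os.waitpid(pid, 0)
    return os.waitstatus_to_exitcode(status)
```

Run as root, a call such as `run_in_root("/srv/rootfs", ["/bin/sh"])` would start a shell that sees the directory `/srv/rootfs` as `/`. That path is a made-up example and would need to contain a usable root file system.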

|

You are reading a sample chapter from CentOS Stream 9 Essentials. Buy the full book now in eBook or Print format.

Full book includes 34 chapters and 290 pages. Learn more. |

Container Uses and Advantages

The main advantage of containers is that they require considerably less resource overhead than virtualization, allowing many container instances to be run simultaneously on a single server. They can be started and stopped rapidly and efficiently in response to demand levels. In addition, containers run natively on the host system, providing a level of performance that a virtual machine cannot match.

Containers are also highly portable and can be easily migrated between systems. Combined with a container management system such as Docker, OpenShift, or Kubernetes, it is possible to deploy and manage containers on a vast scale spanning multiple servers and cloud platforms, potentially running thousands of containers.

Containers are frequently used to create lightweight execution environments for applications. In this scenario, each container provides an isolated environment containing the application together with all of the runtime and supporting files required by that application to run. The container can then be deployed to any other compatible host system that supports container execution and run without any concern that the target system may lack the necessary runtime configuration for the application, because all of the application’s dependencies are already in the container.

Containers are also helpful when bridging the gap between development and production environments. When development and QA work is performed in containers, those containers can be passed to production and launched safely because the applications run in the same container environments in which they were developed and tested.

Containers also promote a modular approach to deploying large and complex solutions. Instead of developing applications as single monolithic entities, containers can be used to design applications as groups of interacting modules, each running in a separate container.

One possible drawback of containers is that the guest environment must be compatible with the shared kernel. It is not, for example, possible to run Microsoft Windows in a container on a Linux system, nor can a Linux guest system designed for the 2.6 version of the kernel share a 2.4 version kernel. Such scenarios are not, however, what containers were designed for. Rather than being seen as limitations, these restrictions should be considered part of what makes containers a simple, scalable, and reliable deployment platform.
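Because the kernel is shared, a process inside a container reports the host's kernel release, while the distribution it identifies as comes from the container's own root file system. The Python sketch below, assuming a Linux system that provides the standard `/etc/os-release` file, reads both values so they can be compared on the host and inside a container. The function names are illustrative only.

```python
import platform
from pathlib import Path


def parse_os_release(text):
    """Parse os-release style KEY=value lines into a dictionary."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and anything that is not KEY=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key] = value.strip().strip('"')
    return info


def describe_environment(os_release_path="/etc/os-release"):
    """Return the kernel release and distribution name seen by this process."""
    path = Path(os_release_path)
    userland = parse_os_release(path.read_text()) if path.exists() else {}
    return {
        # Answered by the running kernel, which containers share with the host.
        "kernel": platform.release(),
        # Read from a file in the current root file system.
        "distribution": userland.get("PRETTY_NAME", "unknown"),
    }


if __name__ == "__main__":
    print(describe_environment())
```

Running the script on the host and again inside a container based on a different distribution should show the same `kernel` value but a different `distribution` value.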

CentOS Stream 9 Container Tools

CentOS Stream 9 provides several tools for creating, inspecting, and managing containers. The main tools are as follows:

- buildah – A command-line tool for building container images.

- podman – A command-line based container runtime and management tool. Performs tasks such as downloading container images from remote registries, inspecting images, and starting and stopping containers.

- skopeo – A command-line utility used to convert container images, copy images between registries, and inspect images stored in registries without downloading them.

- runc – A lightweight container runtime for launching and running containers from the command line.

- OpenShift – An enterprise-level container application management platform consisting of command-line and web-based tools.

All of the above tools comply with the Open Container Initiative (OCI), a set of specifications designed to ensure that containers conform to the same standards between competing tools and platforms.

The CentOS Container Registry

Although CentOS Stream 9 has a set of tools designed to be used in place of those provided by Docker, those tools still need access to CentOS images for use when building containers. For this purpose, a set of CentOS Stream container images is hosted on a repository located on Red Hat’s Quay.io website at the following URL:

https://quay.io/repository/centos/centos?tab=tags

In addition to downloading (referred to as “pulling” in container terminology) container images from Quay.io and other third-party host registries, you can also use registries to store your own images. This can be achieved by hosting your own registry or using existing services such as Quay.io, Docker Hub, Amazon AWS, Google Cloud, Microsoft Azure, and IBM Cloud, to name a few options.
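An image stored in a registry is identified by a reference that combines the registry host, the repository, and a tag. As a rough illustration, the Python sketch below splits such a reference into its parts and builds the argument list for a `podman pull` command without running it. The `stream9` tag is assumed here as an example, the function names are hypothetical, and the parser is deliberately simplified: it expects the reference to begin with a registry host and does not handle digest references.

```python
def split_image_reference(reference, default_tag="latest"):
    """Split 'registry/repository:tag' into its three parts.

    Simplified: the reference must start with a registry host, and
    digest references (name@sha256:...) are not supported.
    """
    registry, _, remainder = reference.partition("/")
    if not remainder:
        raise ValueError(f"no registry in image reference: {reference!r}")
    # Look for the tag separator only after the registry, so that a
    # registry port such as localhost:5000 is not mistaken for a tag.
    repository, sep, tag = remainder.rpartition(":")
    if not sep:
        repository, tag = remainder, default_tag
    return {"registry": registry, "repository": repository, "tag": tag}


def pull_command(reference):
    """Build the argument list for 'podman pull' without executing it."""
    return ["podman", "pull", reference]


if __name__ == "__main__":
    ref = "quay.io/centos/centos:stream9"  # example tag, assumed for illustration
    print(split_image_reference(ref))
    print(" ".join(pull_command(ref)))
```

The list returned by `pull_command` could be passed to `subprocess.run` on a system where Podman is installed.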

Container Networking

By default, containers are connected to a network using a Container Network Interface (CNI) bridged network stack. In the bridged configuration, all the containers running on a server belong to the same subnet and, as such, can communicate with each other. The containers are also connected to the external network by bridging the host system’s network connection. Similarly, the host can access the containers via a virtual network interface (usually named podman0) which will have been created as part of the container tool installation.
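The idea that containers on the bridge share one subnet can be checked with a few lines of Python. The sketch below uses the standard `ipaddress` module. The subnet `10.88.0.0/16` and the container addresses are assumed example values, and the function names are hypothetical; the actual subnet on a given system can be checked with the `podman network inspect` command.

```python
import ipaddress

# Assumed example subnet for the bridged container network.
BRIDGE_SUBNET = ipaddress.ip_network("10.88.0.0/16")


def on_bridge(address, subnet=BRIDGE_SUBNET):
    """Return True if the address belongs to the bridge subnet."""
    return ipaddress.ip_address(address) in subnet


def can_talk_directly(addr_a, addr_b, subnet=BRIDGE_SUBNET):
    """Return True if both addresses sit on the same bridge subnet."""
    return on_bridge(addr_a, subnet) and on_bridge(addr_b, subnet)


if __name__ == "__main__":
    # Two hypothetical container addresses and one external address.
    print(can_talk_directly("10.88.0.2", "10.88.0.3"))
    print(can_talk_directly("10.88.0.2", "192.168.1.10"))
```

Two containers whose addresses both fall inside the bridge subnet can reach each other directly, while traffic to an address outside it leaves through the host's network connection.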

Summary

Linux Containers offer a lightweight alternative to virtualization and take advantage of the structure of the Linux and UNIX operating systems. Linux Containers share the host operating system’s kernel, with each container having its own root file system containing its files, libraries, and applications. As a result, containers are highly efficient and scalable and provide an ideal platform for building and deploying modular enterprise-level solutions. In addition, several tools and platforms are available for building, deploying, and managing containers, including third-party solutions and those provided by Red Hat.